Robustness - refers to how well the code copes with a variety of inputs, including unexpected ones, and how well it detects and mitigates errors. This is very important because, as a programmer, you don't want software that easily falls apart; you want code that makes your job easier by detecting and handling bad data in a data set.
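As a minimal sketch of robust input handling (the `parse_age` function and its 0 to 150 range are illustrative assumptions, not taken from any particular project), the function below rejects bad input with a clear error instead of failing somewhere later, and the caller decides how to recover:

```python
def parse_age(raw: str) -> int:
    """Convert user input to an age, rejecting values that make no sense."""
    text = raw.strip()
    if not text:
        raise ValueError("age is required")
    try:
        age = int(text)
    except ValueError:
        # Re-raise with a message the caller can show to the user
        raise ValueError(f"age must be a whole number, got {raw!r}") from None
    if not 0 <= age <= 150:
        raise ValueError(f"age out of range: {age}")
    return age


# The caller handles each bad value instead of crashing
for value in [" 42 ", "abc", "-3"]:
    try:
        print(parse_age(value))
    except ValueError as err:
        print("rejected:", err)
```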

Readability - refers to how easily the code can be read and how well it is laid out. Readable code is very important because, when you or someone else reviews it, errors are much easier to notice if the code is comprehensible. If the code is cluttered, looking through it for errors or making changes will take much longer.

Portability - refers to how well the code can be used on different operating systems, computers and environments. This is very important because you may be working on something that has to be used by another company, possibly in another country, so you have to make sure that a different OS, computer and company can run and use your code.

Maintainability - refers to how easy it is to maintain the code, including how easy it is to edit and process. This is also highly important: you will encounter issues throughout development, since that is part of making good code, and even once you are done, bugs and errors you may not have seen before will appear. Easily maintainable code means you can go in, quickly find the issue and resolve it.

Code Quality Metrics

Qualitative Code Quality Metrics

Qualitative metrics are subjective measurements that aim to better define what good means.

Extensibility

Extensibility is the degree to which software is coded to incorporate future growth. The central theme of extensible applications is that developers should be able to add new features to code or change existing functionality without it affecting the entire system.
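One common way to achieve this is a registry (plugin) pattern. In the hypothetical sketch below, new export formats are added by registering a function, without editing the existing `export` code; all names here are illustrative:

```python
import json
from typing import Callable, Dict

# Maps a format name to the function that produces that format
EXPORTERS: Dict[str, Callable[[dict], str]] = {}


def exporter(name: str):
    """Decorator that registers a new export format under `name`."""
    def register(func: Callable[[dict], str]) -> Callable[[dict], str]:
        EXPORTERS[name] = func
        return func
    return register


@exporter("json")
def to_json(record: dict) -> str:
    return json.dumps(record, sort_keys=True)


@exporter("csv")
def to_csv(record: dict) -> str:
    keys = sorted(record)
    return ",".join(keys) + "\n" + ",".join(str(record[k]) for k in keys)


def export(record: dict, fmt: str) -> str:
    # This function never changes when a new format is registered
    try:
        return EXPORTERS[fmt](record)
    except KeyError:
        raise ValueError(f"unknown format: {fmt}") from None


print(export({"id": 1, "name": "Ada"}, "csv"))
```

Adding, say, XML support would mean writing one more decorated function; `export` itself stays untouched.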

Maintainability

Code maintainability is a qualitative measurement of how easy it is to make changes, and the risks associated with such changes.

Developers can make judgments about maintainability when they make changes — if the change should take an hour but it ends up taking three days, the code probably isn’t that maintainable.

Another way to gauge maintainability is to check the number of lines of code in a given software feature or in an entire application. Software with more lines can be harder to maintain.

Readability and Code Formatting

Readable code should use indentation and be formatted according to standards particular to the language it’s written in; this makes the application structure consistent and visible.

Comments should be used where required, with concise explanations given for each method. Names for methods should be meaningful in the sense that the names indicate what the method does.
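For illustration (the example is ours, not from a particular style guide), compare a cryptic function with a readable version that follows PEP 8 formatting, uses a meaningful name and has a short docstring. Both do exactly the same thing:

```python
# Hard to read: unclear names, no explanation
def f(l, t):
    return [x for x in l if x[1] > t]


# Easier to read: the name says what it does, the docstring says how
def filter_students_above(
    scores: list[tuple[str, int]], threshold: int
) -> list[tuple[str, int]]:
    """Return the (name, score) pairs whose score is strictly above `threshold`."""
    return [(name, score) for name, score in scores if score > threshold]
```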

Well-tested

Well tested programs are likely to be of higher quality, because much more attention is paid to the inner workings of the code and its impact on users. Testing means that the application is constantly under scrutiny.
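A minimal sketch of what such tests can look like, using Python's built-in `unittest` module; `apply_discount` is a hypothetical function under test:

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical example)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_normal_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)
```

Saved in a file such as `test_discount.py`, the tests can be run with `python -m unittest`; every later change to `apply_discount` is then checked automatically.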

Quantitative Code Quality Metrics

There are a few quantitative measurements that can help reveal well-written applications:

Weighted Micro Function Points

This metric is a modern software sizing algorithm that parses source code and breaks it down into micro functions. The algorithm then produces several complexity metrics from these micro functions, before interpolating the results into a single score.

WMFP automatically measures the complexity of existing source code. The metrics used to determine the WMFP value include comments, code structure, arithmetic calculations, and flow control path.

Halstead Complexity Measures

The Halstead complexity measures were introduced in 1977. These measures include program vocabulary, program length, volume, difficulty, effort, and the estimated number of bugs in a module. The aim of the measurement is to assess the computational complexity of a program. The more complex any code is, the harder it is to maintain and the lower its quality.
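The Halstead measures are simple arithmetic on four counts: distinct operators n1, distinct operands n2, total operators N1 and total operands N2. The sketch below assumes those counts have already been obtained (tools differ in what they count as an operator) and uses the common V / 3000 estimate for delivered bugs:

```python
import math
from dataclasses import dataclass


@dataclass
class Halstead:
    n1: int  # distinct operators
    n2: int  # distinct operands
    N1: int  # total operators
    N2: int  # total operands

    @property
    def vocabulary(self) -> int:
        return self.n1 + self.n2

    @property
    def length(self) -> int:
        return self.N1 + self.N2

    @property
    def volume(self) -> float:
        return self.length * math.log2(self.vocabulary)

    @property
    def difficulty(self) -> float:
        return (self.n1 / 2) * (self.N2 / self.n2)

    @property
    def effort(self) -> float:
        return self.difficulty * self.volume

    @property
    def bugs(self) -> float:
        # Halstead's estimate of the number of delivered bugs
        return self.volume / 3000


# "c = a + b" under one counting convention: operators {=, +}, operands {c, a, b}
h = Halstead(n1=2, n2=3, N1=2, N2=3)
print(h.vocabulary, h.length, round(h.volume, 2), h.difficulty)
```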

Cyclomatic Complexity

Cyclomatic complexity is a metric that measures the structural complexity of a program. It does so by counting the number of linearly independent paths through a program’s source code. Methods with high cyclomatic complexity (greater than 10) are more likely to contain defects.

With cyclomatic complexity, developers get an indicator of how difficult their code will be to test, maintain and troubleshoot. This metric can be combined with a size metric, such as lines of code, to predict how easy the application will be to modify and maintain.
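A simplified sketch of the counting rule (one plus the number of decision points), using Python's `ast` module. Real tools such as radon or mccabe apply more detailed rules and report per function; this version simply counts over the whole snippet it is given:

```python
import ast

# Statements/expressions that each add one extra path through the code
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' short-circuits, adding len(values) - 1 branches
            decisions += len(node.values) - 1
    return decisions + 1


SHIPPING = '''
def shipping_cost(weight, express, member):
    if weight <= 0:
        raise ValueError("weight must be positive")
    cost = 5 if weight < 2 else 9
    if express and not member:
        cost += 10
    return cost
'''

print(cyclomatic_complexity(SHIPPING))  # 5
```

Two `if` statements, one conditional expression and one `and` give four decision points, so the score is 5, well under the threshold of 10 mentioned in the text.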

Code Quality Metrics: The Business Impact

From a business perspective, the most important aspects of code quality are those that most significantly impact software ROI. Software maintenance consumes 40 to 80 percent of the average software development budget. A major challenge in software maintenance is understanding the existing code, and this is where code quality metrics can have a big impact.

Consequently, quality code should always be:

- Easy to understand (readability, formatting, clarity, well-documented)

- Easy to change (maintainability, extensibility)

Tools to maintain the code quality

SonarQube

SonarQube is a more sophisticated analysis tool that digs deeper into the code and examines several metrics of code complexity. This helps developers understand their software better.

Coverage.py

This tool measures code coverage for Python, showing which parts of the source code are exercised by tests. Ideally, 100% of the code is covered, but 80-90% is generally considered a healthy percentage.

Linters

Used for static analysis of the source code, linters serve as primary indicators of potential issues with the code. PyLint is a popular choice for Python, while ESLint is used for JavaScript.
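Linters inspect source code without running it. As a toy illustration (not how PyLint is implemented), the check below parses Python source with `ast` and reports functions that have no docstring, similar in spirit to PyLint's `missing-function-docstring` message:

```python
import ast


def missing_docstrings(source: str) -> list[str]:
    """Return 'line N: name' for every function defined without a docstring."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                problems.append(f"line {node.lineno}: {node.name}")
    return problems


LINT_SAMPLE = '''
def documented():
    """I explain myself."""

def undocumented():
    return 42
'''

for problem in missing_docstrings(LINT_SAMPLE):
    print("missing docstring:", problem)
```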

Collaborator

Collaborator (from SmartBear) is a comprehensive peer code review tool, built for teams working on projects where code quality is critical, and it ranks highly in many comparisons of code review tools.

Codebrag

It is a free and open-source tool known for its simplicity. Codebrag addresses needs such as non-blocking code review, inline comments and likes, and smart email notifications. What's more, its agile code review approach helps teams deliver better software.

Gerrit

Gerrit is a web based code review system, facilitating online code reviews for projects using the Git version control system. Gerrit makes reviews easier by showing changes in a side-by-side display, and allowing inline comments to be added by any reviewer.

Dependency Managers

First, what is a dependency? A dependency is an external, standalone program module (library) that performs a specific task. It can be as small as a single file or as large as a collection of files and folders organized into packages. For example, backup-mongodb is a dependency of a blog application that uses it to back up its database remotely and send the backup to an email address. In other words, the blog application depends on the package to back up its database.

Dependency managers are software modules that coordinate the integration of external libraries or packages into a larger application stack. Dependency managers use configuration files such as composer.json, package.json, build.gradle or pom.xml to determine:

- Which dependencies to get

- Which specific version of each dependency, and

- Which repository to get them from.

A repository is a source from which declared dependencies can be fetched using their name and version. Most dependency managers have dedicated repositories from which they fetch declared dependencies: for example, Maven Central for Maven and Gradle, the npm registry for npm, and Packagist for Composer.

So when you declare a dependency in your config file (e.g. composer.json), the manager goes to the repository, fetches the dependency that matches the criteria you have set in the config file, and makes it available in your execution environment.
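A minimal sketch of that lookup, assuming a hypothetical in-memory repository with made-up package names and a simplified caret rule: `^1.1.0` means "at least 1.1.0 but below 2.0.0", as npm and Composer treat it for versions 1.0.0 and above. Real managers support many more constraint forms, pre-release tags and network repositories:

```python
# Hypothetical repository: package name -> versions it can serve
REPOSITORY = {
    "example-pad": ["1.0.0", "1.1.3", "1.3.0", "2.0.0"],
    "example-dates": ["0.9.0", "1.4.2", "1.5.0"],
}


def parse(version: str) -> tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def satisfies(version: str, constraint: str) -> bool:
    if constraint.startswith("^"):
        base, v = parse(constraint[1:]), parse(version)
        # Same major version, and not older than the base version
        return v[0] == base[0] and v >= base
    return version == constraint  # anything else is treated as an exact pin


def resolve(manifest: dict[str, str]) -> dict[str, str]:
    """Pick the newest repository version matching each declared constraint."""
    resolved = {}
    for name, constraint in manifest.items():
        candidates = [v for v in REPOSITORY[name] if satisfies(v, constraint)]
        if not candidates:
            raise LookupError(f"no version of {name} matches {constraint}")
        resolved[name] = max(candidates, key=parse)
    return resolved


print(resolve({"example-pad": "^1.1.0", "example-dates": "1.4.2"}))
```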

Example of Dependency Managers

- Composer (used with PHP projects)

- Gradle (used with Java projects, including Android apps; also a build tool)

- Node Package Manager (npm: used with Node.js projects)

- Yarn (an alternative package manager for Node.js projects)

- Maven (used with Java projects, including Android apps; also a build tool)

Why do I need dependency managers?

Summing them up in two points:

- They make sure the versions of dependencies you used in the development environment are the same ones used in production, so there is no unexpected behaviour.

- They make keeping your dependencies updated with latest patch, release or major version very easy.

Role of dependency/package management tools in software development

Dependency management tools move the responsibility of managing third-party libraries from the code repository to the automated build. Typically dependency management tools use a single file to declare all library dependencies, making it much easier to see all libraries and their versions at once.

Functions of dependency/package management tools in software development

A software package is an archive file containing a computer program as well as the metadata necessary for its deployment. The program may be in source code form that has to be compiled and built first. Package metadata includes the package description, package version and dependencies (other packages that need to be installed beforehand). Package managers are responsible for finding, installing, maintaining or uninstalling software packages on the user's command. Typical functions of a package management system include:

• Working with file archivers to extract package archives

• Ensuring the integrity and authenticity of the package by verifying their digital certificates and checksums

• Looking up, downloading, installing or updating existing software from a software repository or app store

• Grouping packages by function to reduce user confusion

• Managing dependencies to ensure a package is installed with all packages it requires, thus avoiding “dependency hell”
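Installing a package together with everything it requires amounts to ordering a dependency graph so that each package comes after its own dependencies; a cycle means no valid order exists. A sketch with Python's standard `graphlib` module (Python 3.9+), using hypothetical package names:

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical graph: package -> packages it requires
REQUIRES = {
    "blog-app": {"web-framework", "backup-tool"},
    "web-framework": {"template-engine", "http-lib"},
    "backup-tool": {"http-lib"},
    "template-engine": set(),
    "http-lib": set(),
}


def install_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every package follows its dependencies."""
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as err:
        # err.args[1] holds the detected cycle
        raise RuntimeError(f"circular dependency: {err.args[1]}") from err


print(install_order(REQUIRES))
```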


Build tools

Build tools are programs that automate the creation of executable applications from source code (e.g. an .apk file for an Android app). Building incorporates compiling, linking and packaging the code into a usable or executable form.

Build automation is the act of scripting or automating the wide variety of tasks that software developers do in their day-to-day work, such as:

- Downloading dependencies.

- Compiling source code into binary code.

- Packaging that binary code.

- Running tests.

- Deployment to production systems.
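As a minimal sketch of what build tools automate, the Python runner below executes named steps in the order listed above and stops at the first failure, so nothing is deployed after a failed step. The step functions are hypothetical stand-ins; a real build would call the dependency manager, compiler and test runner:

```python
from typing import Callable


def run_build(steps: list[tuple[str, Callable[[], None]]]) -> list[str]:
    """Run each step in order; stop at the first one that raises."""
    completed = []
    for name, step in steps:
        try:
            step()
        except Exception as err:
            print(f"BUILD FAILED at '{name}': {err}")
            break
        completed.append(name)
        print(f"[ok] {name}")
    return completed


def failing_tests() -> None:
    raise AssertionError("1 test failed")


# Hypothetical steps that do nothing except the failing test step
pipeline = [
    ("download dependencies", lambda: None),
    ("compile", lambda: None),
    ("package", lambda: None),
    ("run tests", failing_tests),
    ("deploy", lambda: None),
]
print(run_build(pipeline))
```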

Why do we use build tools or build automation?

In small projects, developers will often manually invoke the build process. This is not practical for larger projects, where it is very hard to keep track of what needs to be built, in what sequence and what dependencies there are in the building process. Using an automation tool allows the build process to be more consistent.

The role of build automation in build tools indicating the need for build automation

Build Automation is the process of scripting and automating the retrieval of software code from a repository, compiling it into a binary artifact, executing automated functional tests, and publishing it into a shared and centralized repository.

Compare and contrast different build tools used in industry

Ant with Ivy

Ant was the first among "modern" build tools. In many respects it is similar to Make. It was released in 2000 and in a short period of time became the most popular build tool for Java projects. It has a very low learning curve, allowing anyone to start using it without any special preparation. It is based on the idea of procedural programming.

After its initial release, it was improved with the ability to accept plug-ins.

Its major drawback was the use of XML as the format for build scripts. XML, being hierarchical in nature, is not a good fit for the procedural programming approach Ant uses. Another problem with Ant is that its XML tends to become unmanageably big for all but very small projects.

Later on, as dependency management over the network became a must, Ant adopted Apache Ivy.

The main benefit of Ant is the control it gives over the build process.

Maven

Maven was released in 2004. Its goal was to solve some of the problems developers faced when using Ant.

Maven continues to use XML as the format for build specifications. However, the structure is diametrically different. While Ant requires developers to write all the commands that lead to the successful execution of some task, Maven relies on conventions and provides the available targets (goals) that can be invoked. As an additional, and probably the most important, feature, Maven introduced the ability to download dependencies over the network (later adopted by Ant through Ivy). That in itself revolutionized the way we deliver software.

However, Maven has its own problems. Its dependency management does not handle conflicts between different versions of the same library well (something Ivy is much better at). XML as the build configuration format is strictly structured and highly standardized, so customizing targets (goals) is hard. Since Maven is focused mostly on dependency management, complex, customized build scripts are actually harder to write in Maven than in Ant.

Maven configuration written in XML continues to be big and cumbersome. On bigger projects it can run to hundreds of lines without actually doing anything "extraordinary".

The main benefit of Maven is its life cycle. As long as the project follows certain standards, Maven lets one pass through the whole life cycle with relative ease. This comes at the cost of flexibility.

Gradle

Meanwhile, interest in DSLs (Domain Specific Languages) kept growing. The idea is to have languages designed to solve problems belonging to a specific domain. In the case of builds, one result of applying a DSL is Gradle.

Gradle combines the good parts of both tools and builds on top of them with a DSL and other improvements. It has Ant's power and flexibility with Maven's life cycle and ease of use. The result is a tool that was released in 2012 and gained a lot of attention in a short period of time. For example, Google adopted Gradle as the default build tool for Android apps.

Gradle does not use XML. Instead, it has its own DSL based on Groovy (one of the JVM languages). As a result, Gradle build scripts tend to be much shorter and clearer than those written for Ant or Maven. The amount of boilerplate code is much smaller with Gradle, since its DSL is designed to solve a specific problem: moving software through its life cycle, from compilation through static analysis and testing to packaging and deployment.

Initially, Gradle used Apache Ivy for its dependency management. Later on it moved to its own native dependency resolution engine.

Gradle's approach can be summed up as "convention is good and so is flexibility".

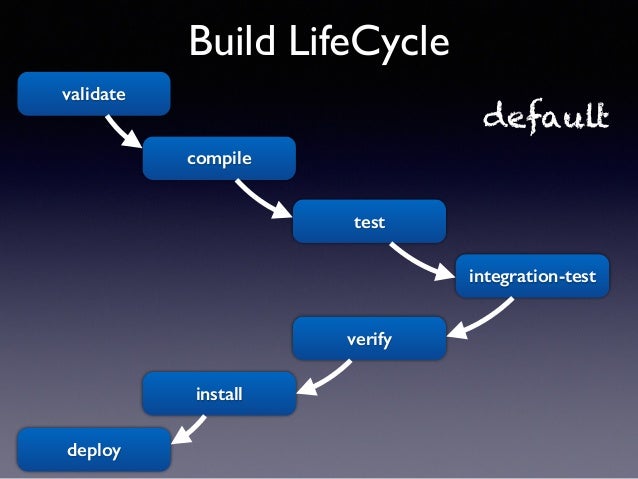

Build life cycle

Maven is based around the central concept of a build lifecycle. What this means is that the process for building and distributing a particular artifact (project) is clearly defined.

For the person building a project, this means that it is only necessary to learn a small set of commands to build any Maven project, and the POM will ensure they get the results they desired.

There are three built-in build lifecycles: default, clean and site. The default lifecycle handles your project deployment, the clean lifecycle handles project cleaning, while the site lifecycle handles the creation of your project’s site documentation.

A Build Lifecycle is Made Up of Phases

Each of these build lifecycles is defined by a different list of build phases, wherein a build phase represents a stage in the lifecycle.

For example, the default lifecycle comprises the following phases:

- validate – validate the project is correct and all necessary information is available

- compile – compile the source code of the project

- test – test the compiled source code using a suitable unit testing framework. These tests should not require the code be packaged or deployed

- package – take the compiled code and package it in its distributable format, such as a JAR.

- verify – run any checks on results of integration tests to ensure quality criteria are met

- install – install the package into the local repository, for use as a dependency in other projects locally

- deploy – done in the build environment, copies the final package to the remote repository for sharing with other developers and projects.

These lifecycle phases (plus the other lifecycle phases not shown here) are executed sequentially to complete the default lifecycle. Given the lifecycle phases above, this means that when the default lifecycle is used, Maven will first validate the project, then will try to compile the sources, run those against the tests, package the binaries (e.g. jar), run integration tests against that package, verify the integration tests, install the verified package to the local repository, then deploy the installed package to a remote repository.
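The "everything up to the requested phase" behaviour can be modelled in a few lines. This Python sketch uses only the seven phases listed above (the real default lifecycle has more, such as `test-compile` and `integration-test`) and only returns phase names rather than running anything:

```python
# Only the phases listed above, in lifecycle order
DEFAULT_LIFECYCLE = ("validate", "compile", "test", "package",
                     "verify", "install", "deploy")


def phases_to_run(requested: str) -> list[str]:
    """Running one phase runs every earlier phase of the lifecycle first, in order."""
    if requested not in DEFAULT_LIFECYCLE:
        raise ValueError(f"unknown phase: {requested}")
    end = DEFAULT_LIFECYCLE.index(requested) + 1
    return list(DEFAULT_LIFECYCLE[:end])


print(phases_to_run("package"))  # roughly what 'mvn package' walks through
```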

What is Maven?

Maven, a Yiddish word meaning "accumulator of knowledge", was originally started as an attempt to simplify the build processes in the Jakarta Turbine project. There were several projects, each with its own slightly different Ant build files, and JARs were checked into CVS. The Maven developers wanted a standard way to build the projects, a clear definition of what a project consisted of, an easy way to publish project information and a way to share JARs across several projects.

The result is a tool that can be used for building and managing any Java-based project, with the aim of making Java developers' day-to-day work easier and helping them understand any Java-based project.

How Maven uses convention over configuration

Maven uses convention over configuration, which means developers are not required to create the build process themselves. When a Maven project is created, Maven creates a default project structure. The developer only needs to place files in the appropriate folders and does not need to define any configuration for them in pom.xml.
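The folder names below are Maven's standard directory layout; the `create_maven_layout` helper is a hypothetical illustration in Python's `pathlib` (in practice `mvn archetype:generate` produces the skeleton, and compiled output goes to `target/`):

```python
import tempfile
from pathlib import Path

# Maven's default locations; pom.xml needs no configuration to use them
STANDARD_DIRS = [
    "src/main/java",       # application source code
    "src/main/resources",  # application resources (config files, etc.)
    "src/test/java",       # test source code
    "src/test/resources",  # test resources
]


def create_maven_layout(root: Path) -> list[Path]:
    """Create the conventional directories under root (hypothetical helper)."""
    created = []
    for relative in STANDARD_DIRS:
        path = root / relative
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created


with tempfile.TemporaryDirectory() as tmp:
    for path in create_maven_layout(Path(tmp)):
        print(path.relative_to(tmp))
```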

Build phases, build life cycle, build profile, and build goal in Maven

A build lifecycle is a well-defined sequence of phases, which defines the order in which goals are executed. Here, a phase represents a stage in the life cycle. As an example, a typical Maven build lifecycle consists of the following sequence of phases:

| Phase | Handles | Description |
|---|---|---|
| validate | validating the information | Validates that the project is correct and that all necessary information is available. |
| process-resources | resource copying | Copies resources into the output directory; resource copying can be customized in this phase. |
| compile | compilation | Compiles the source code. |
| test | testing | Tests the compiled source code using a suitable testing framework. |
| package | packaging | Creates the JAR/WAR package specified by the packaging element in pom.xml. |
| install | installation | Installs the package into the local Maven repository. |
| deploy | deploying | Copies the final package to the remote repository. |

Maven Build Lifecycle

The Maven build follows a specific life cycle to deploy and distribute the target project.

There are three built-in life cycles:

- default: the main life cycle as it’s responsible for project deployment

- clean: to clean the project and remove all files generated by the previous build

- site: to create the project’s site documentation

Maven Goal

Each phase is a sequence of goals, and each goal is responsible for a specific task.

When we run a phase – all goals bound to this phase are executed in order.

Here are some of the phases and default goals bound to them:

- compiler:compile – the compile goal from the compiler plugin is bound to the compile phase

- compiler:testCompile is bound to the test-compile phase

- surefire:test is bound to the test phase

- install:install is bound to the install phase

- jar:jar or war:war (depending on the project's packaging) is bound to the package phase
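Putting phases and goals together, the sketch below models which goals run when a phase is requested, using only the bindings listed above and assuming jar packaging. It is a simplification: actual bindings depend on the project's packaging and plugins, and `mvn help:describe` shows the real ones:

```python
# Phase -> goals bound to it, in lifecycle order (jar packaging assumed)
BINDINGS = {
    "compile": ["compiler:compile"],
    "test-compile": ["compiler:testCompile"],
    "test": ["surefire:test"],
    "package": ["jar:jar"],
    "install": ["install:install"],
}


def goals_for(phase: str) -> list[str]:
    """Goals executed for a phase: those of the phase and of every earlier phase."""
    phases = list(BINDINGS)
    if phase not in phases:
        raise ValueError(f"unknown phase: {phase}")
    goals = []
    for p in phases[: phases.index(phase) + 1]:
        goals.extend(BINDINGS[p])
    return goals


print(goals_for("test"))
```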

We can list all goals bound to a specific phase and their plugins using the command:

```shell
mvn help:describe -Dcmd=PHASENAME
```

For example, to list all goals bound to the compile phase, we can run:

```shell
mvn help:describe -Dcmd=compile
```

And get the sample output:

```
'compile' is a phase corresponding to this plugin:
org.apache.maven.plugins:maven-compiler-plugin:3.1:compile
```

How Maven manages dependency/packages and build life cycle

Best Practice – Using a Repository Manager

A repository manager is a dedicated server application designed to manage repositories of binary components. The usage of a repository manager is considered an essential best practice for any significant usage of Maven.

Purpose

A repository manager serves these essential purposes:

- act as dedicated proxy server for public Maven repositories

- provide repositories as a deployment destination for your Maven project outputs
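The proxy role boils down to "serve from the local store if possible, otherwise fetch upstream once and keep a copy". A minimal in-memory sketch; `fetch_from_central` is a hypothetical stand-in for a download from a public repository, and real repository managers such as Nexus or Artifactory do far more (disk storage, hosted repositories, access control):

```python
from typing import Callable


class CachingProxy:
    """Serve artifacts from a local cache, fetching upstream only on a miss."""

    def __init__(self, upstream: Callable[[str], bytes]):
        self.upstream = upstream
        self.cache: dict[str, bytes] = {}
        self.upstream_calls = 0

    def get(self, coordinates: str) -> bytes:
        if coordinates not in self.cache:
            self.upstream_calls += 1
            self.cache[coordinates] = self.upstream(coordinates)
        return self.cache[coordinates]


def fetch_from_central(coordinates: str) -> bytes:
    # Hypothetical: a real proxy would download the artifact over HTTP here
    return f"<bytes of {coordinates}>".encode()


proxy = CachingProxy(fetch_from_central)
for _ in range(3):  # three builds asking for the same artifact
    proxy.get("junit:junit:4.13.2")
print(proxy.upstream_calls)  # 1
```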

Use the POM

A Project Object Model or POM is the fundamental unit of work in Maven. It is an XML file that contains information about the project and configuration details used by Maven to build the project. It contains default values for most projects.
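To show what that project information looks like, the sketch below embeds a minimal POM (the `com.example:demo-app` coordinates are made up; `junit:junit` is a real artifact) and reads it with Python's `xml.etree.ElementTree`. Real POMs declare the `http://maven.apache.org/POM/4.0.0` namespace, so lookups must include it:

```python
import xml.etree.ElementTree as ET

POM = """<project xmlns="http://maven.apache.org/POM/4.0.0">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.example</groupId>
  <artifactId>demo-app</artifactId>
  <version>1.0.0</version>
  <packaging>jar</packaging>
  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.13.2</version>
      <scope>test</scope>
    </dependency>
  </dependencies>
</project>"""

NS = {"m": "http://maven.apache.org/POM/4.0.0"}


def coordinates(element: ET.Element) -> str:
    """groupId:artifactId:version of a project or dependency element."""
    return ":".join(element.findtext(f"m:{tag}", namespaces=NS)
                    for tag in ("groupId", "artifactId", "version"))


project = ET.fromstring(POM)
print("project:", coordinates(project))
for dep in project.findall("m:dependencies/m:dependency", NS):
    print("depends on:", coordinates(dep))
```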

Contemporary tools and practices widely used in the software industry

1. Bower

The package management system Bower is installed through npm, which seems a little redundant, but there is a difference between the two: npm offers more features, while Bower aims to reduce file size and load times for front-end dependencies.

2. NPM

Each project can use a package.json file set up through npm and even managed with Gulp (on Node). Dependencies can be updated and optimized right from the terminal, and you can build new projects with dependency files and version numbers automatically pulled from the package.json file. npm is valuable for more than just dependency management, and it's practically a must-know tool for modern web development.

3. RubyGems

RubyGems is a package manager for Ruby that is highly popular among web developers. The project is open source and includes all free Ruby gems. To give a brief overview for beginners, a "gem" is just some code that runs in a Ruby environment. Tools such as Bundler build on this to manage gem versions and keep everything up to date.

4. RequireJS

RequireJS is special in that it is primarily a JavaScript toolset. It can be used for loading JS modules quickly, including Node modules. RequireJS loads the dependencies that each module declares, which is somewhat akin to classic C/C++ programming, where included libraries can in turn include further libraries.

5. Jam

Browser-based package management comes in a new form with JamJS. This is a JavaScript package manager with automatic management similar to RequireJS. All your dependencies are pulled into a single JS file, which lets you add and remove items quickly. Plus, these can be updated in the browser regardless of other tools you're using (like RequireJS).

6. Browserify

Most developers know of Browserify even if it's not part of their typical workflow. This is another dependency management tool, which optimizes required modules and libraries by bundling them together. These bundles are supported in the browser, which means you can include and merge modules with plain JavaScript. All you need is npm to get started and then Browserify to get moving.

7. Mantri

Still in its early stages of growth, MantriJS is a dependency system for mid-to-high level web applications. Dependencies are managed through namespaces and organized functionally to avoid collisions and reduce clutter.

8. Volo

The project management tool volo is an open-source npm package that can create projects, add libraries and automate workflows. Volo runs inside Node and relies on JavaScript for project management. A brief intro guide on GitHub explains the installation process and common usage. For example, with the volo create command you can start a new project from a template such as HTML5 Boilerplate.

9. Ender

Ender is the “no-library library” and is one of the lightest package managers you’ll find online. It allows devs to search through JS packages and install/compile them right from the command line. Ender is thought of as “NPM’s little sister” by the dev team.

10. pip

The recommended method for installing Python dependencies is pip. The tool is maintained by the Python Packaging Authority (PyPA) and, like Python itself, it is completely open source.