What is the need for VCS?

Version control is a system that records changes to a file or set of files over time so that you can recall specific versions later. For the examples in this book, you will use software source code as the files being version controlled, though in reality you can do this with nearly any type of file on a computer.

Differentiate the three models of VCSs, stating their pros and cons

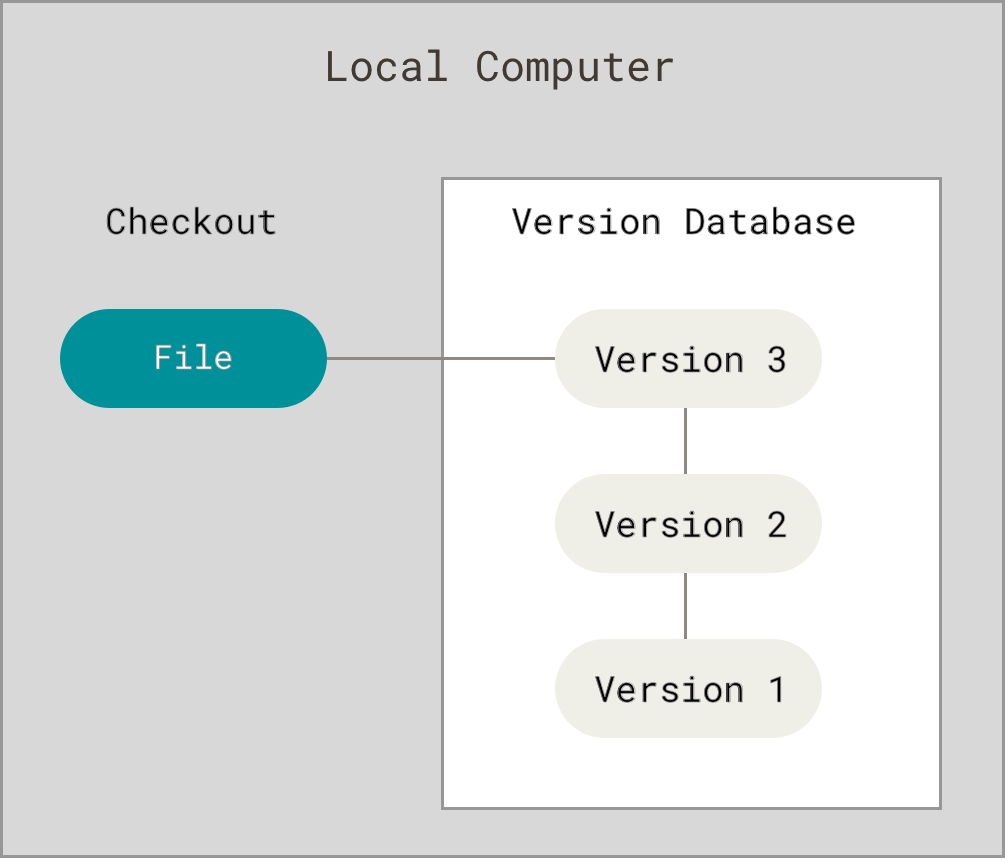

1. Local Version Control Systems

Some benefits of Local version control systems:

- Everything is stored on your own computer

Disadvantages of Local version control systems:

- Cannot be used for collaborative software development

2. Centralized Version Control Systems

Centralized Version Control Systems were developed to record changes on a central server so that developers working on other machines can collaborate. Centralized Version Control Systems have a lot to offer; however, they also have some serious disadvantages.

Some benefits of Centralized Version Control Systems:

- Relatively easy to set up

- Provides transparency

- Enables admins to control the workflow

Disadvantages of Centralized Version Control Systems:

- If the main server goes down, developers can’t save versioned changes

- Remote commits are slow

- Unsolicited changes might ruin development

- If the central database is corrupted, the entire history could be lost (security issues)

3. Distributed Version Control Systems

Distributed Version Control Systems (DVCSs) don’t rely on a central server. They allow developers to clone the repository and work on that copy. Developers will have the entire history of the project on their own hard drives.

It is often said that when working with Distributed Version Control Systems, you can’t have a single central repository. This is not true! Distributed Version Control Systems don’t prevent you from having a single “central” repository. They just provide you with more options.

Advantages of DVCS over CVCS:

- Because of local commits, the full history is always available

- No need to access a remote server (faster access)

- Ability to push your changes continuously

- Saves time, especially with SSH keys

- Good for projects with off-shore developers

Downsides of Distributed Version Control Systems:

- It may not always be obvious who made the most recent change

- File locking doesn’t allow different developers to work on the same piece of code simultaneously. It helps to avoid merge conflicts, but slows down development

- DVCS enables you to clone the repository – this could mean a security issue

- Managing non-mergeable files is contrary to the DVCS concept

- Working with a lot of binary files requires a huge amount of space, and developers can’t do diffs

Git and GitHub, are they same or different? Discuss with facts.

Git is a revision control system, a tool to manage your source code history. GitHub is a hosting service for Git repositories. So they are not the same thing: Git is the tool, GitHub is the service for projects that use Git.

Git is indeed a VCS (Version Control System), just like SVN and Mercurial. You can use GitHub to host Git projects; Bitbucket and GitLab are alternative hosting services. Git itself is command-line software, but you can also use a GUI frontend for it, such as GitKraken.

Personally, I find the GitHub GUI (in the browser) in combination with the Git command line utility more than enough to manage everything, but I can imagine that you would like to have things a bit more clearly laid out in a GUI. I’ve tested out GitKraken and it is good software. Saying it is just a ‘tiny commercial project not worth looking at, and only looking for money’ is pretty short-sighted, I think. It is excellent software, but you’ll have to pay for it. If you don’t have the money or don’t want to spend the money, GitHub Desktop is a very good alternative.

Compare and contrast the Git commands, commit and push

Basically git commit “records changes to the repository” while git push “updates remote refs along with associated objects”. So the first one is used in connection with your local repository, while the latter one is used to interact with a remote repository.
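As a rough illustration of that distinction, the sketch below models a local repository and a remote as plain Python lists of commit messages. The names `ToyRepo`, `ToyRemote`, `commit` and `push` are hypothetical and only mimic the behaviour described above; real Git stores objects and refs rather than lists.

```python
class ToyRemote:
    """Stands in for a remote repository (for example, one hosted on GitHub)."""

    def __init__(self):
        self.commits = []


class ToyRepo:
    """Stands in for a local repository with one configured remote."""

    def __init__(self, remote):
        self.commits = []
        self.remote = remote

    def commit(self, message):
        # Like `git commit`: records a change in the local history only.
        self.commits.append(message)

    def push(self):
        # Like `git push`: sends the commits the remote does not have yet.
        missing = self.commits[len(self.remote.commits):]
        self.remote.commits.extend(missing)
        return len(missing)


remote = ToyRemote()
repo = ToyRepo(remote)
repo.commit("Add README")
repo.commit("Fix typo")
commits_on_remote_before_push = len(remote.commits)
pushed = repo.push()
print(commits_on_remote_before_push, pushed, remote.commits)
```

Until `push()` runs, the remote knows nothing about the two commits, which is why committing works offline while pushing needs a connection to the remote.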

Here is a nice picture from Oliver Steele, that explains the git model and the commands:

Discuss the use of staging area and Git directory

The Git directory is where Git stores the metadata and object database for your project. This is the most important part of Git, and it is what is copied when you clone a repository from another computer.

The staging area is a simple file, generally contained in your Git directory, that stores information about what will go into your next commit. It’s sometimes referred to as the index, but it’s becoming standard to refer to it as the staging area.

The basic Git workflow goes something like this:

- You modify files in your working directory.

- You stage the files, adding snapshots of them to your staging area.

- You do a commit, which takes the files as they are in the staging area and stores that snapshot permanently to your Git directory.
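The three steps above can be sketched with ordinary Python dictionaries. This is a simplified model under stated assumptions: the variable and function names are hypothetical, and the toy staging area holds only the files that were explicitly staged, whereas Git's real index tracks every file in the project.

```python
working_directory = {"app.py": "print('v1')", "notes.txt": "draft"}
staging_area = {}   # the index: what will go into the next commit
git_directory = []  # committed snapshots, oldest first


def stage(path):
    # Like `git add <path>`: copy the file's current content into the index.
    staging_area[path] = working_directory[path]


def commit(message):
    # Like `git commit`: store the staged content as a permanent snapshot.
    snapshot = {"message": message, "files": dict(staging_area)}
    git_directory.append(snapshot)
    return snapshot


# 1. Modify files in the working directory.
working_directory["app.py"] = "print('v2')"
# 2. Stage only the file that should be part of the next commit.
stage("app.py")
# A later edit is not part of the commit unless it is staged again.
working_directory["app.py"] = "print('v3')"
# 3. Commit what is in the staging area.
snapshot = commit("Update app")
print(snapshot["files"])
```

The commit records the content as it was when staged, not the later edit, which is the practical reason the staging area exists as a separate step.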

Explain the collaboration workflow of Git, with example

A Git Workflow is a recipe or recommendation for how to use Git to accomplish work in a consistent and productive manner. Git workflows encourage users to leverage Git effectively and consistently. Git offers a lot of flexibility in how users manage changes. Given Git’s focus on flexibility, there is no standardized process on how to interact with Git. When working with a team on a Git managed project, it’s important to make sure the team is all in agreement on how the flow of changes will be applied. To ensure the team is on the same page, an agreed upon Git workflow should be developed or selected. There are several publicized Git workflows that may be a good fit for your team. Here, we’ll be discussing some of these workflow options.

The array of possible workflows can make it hard to know where to begin when implementing Git in the workplace. This page provides a starting point by surveying the most common Git workflows for software teams.

As you read through, remember that these workflows are designed to be guidelines rather than concrete rules. We want to show you what’s possible, so you can mix and match aspects from different workflows to suit your individual needs.

Discuss the benefits of CDNs

- Media and Advertising: In media, CDNs improve the performance of streaming by delivering the latest content to end users quickly. Demand for online video, real-time audio/video and other media streaming applications is growing, and media, advertising and digital content providers meet it by delivering high-quality content efficiently. CDNs accelerate streaming media content such as breaking news, movies, music, online games and multimedia games in different formats. The content is served from the datacenter nearest to the user’s location.

- Business Websites: CDNs accelerate the interaction between users and websites, which is essential for corporate businesses. Speed is an important metric for websites and a ranking factor. If a user is far away from a website’s server, its pages will load slowly. Content delivery networks overcome this problem by sending the requested content from the CDN server nearest to the user, giving the best possible load times.

- Education: In the area of online education CDNs offer many advantages. Many educational institutes offer online courses that require streaming video/audio lectures, presentations, images and distribution systems. In online courses students from around the world can participate in the same course. CDN ensures that when a student logs into a course, the content is served from the nearest datacenter to the student’s location. CDNs support educational institutes by steering content to regions where most of the students reside.

- E-Commerce: E-commerce companies use CDNs to improve site performance and keep their products available online. According to Computer World, a CDN provides 100% uptime for e-commerce sites, which leads to improved global website performance. With continuous uptime, companies can retain existing customers, attract new customers and explore new markets to maximize their business outcomes.

How do CDNs differ from web hosting servers?

- Web Hosting is used to host your website on a server and let users access it over the internet. A content delivery network is about speeding up the access/delivery of your website’s assets to those users.

- Traditional web hosting would deliver 100% of your content to the user. If they are located across the world, the user still must wait for the data to be retrieved from where your web server is located. A CDN takes a majority of your static and dynamic content and serves it from across the globe, decreasing download times. Most times, the closer the CDN server is to the web visitor, the faster assets will load for them.

- Web Hosting normally refers to one server. A content delivery network refers to a global network of edge servers which distributes your content from a multi-host environment.
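The difference between an origin host and a CDN edge server can be sketched as a cache placed in front of the origin. The classes `OriginServer` and `EdgeServer` are hypothetical, and the sketch ignores cache expiry and invalidation, which real CDNs have to handle.

```python
class OriginServer:
    """Stands in for the web host that holds the authoritative content."""

    def __init__(self, files):
        self.files = files
        self.requests_served = 0

    def get(self, path):
        self.requests_served += 1
        return self.files[path]


class EdgeServer:
    """Stands in for one CDN edge node that caches copies of origin content."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, path):
        if path not in self.cache:
            # Cache miss: fetch once from the origin and keep a copy.
            self.cache[path] = self.origin.get(path)
        return self.cache[path]


origin = OriginServer({"/logo.png": b"image-bytes"})
edge = EdgeServer(origin)
for _ in range(3):
    body = edge.get("/logo.png")
print(origin.requests_served)
```

Three visitor requests reach the edge, but only the first one travels to the origin; the others are answered from the edge's local copy.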

Identify free and commercial CDNs

A Content Delivery Network (CDN) is a globally distributed network of web servers whose purpose is to deliver content faster and keep it highly available. The content is replicated throughout the CDN so it exists in many places at once. Each client accesses a copy of the data near to it, instead of all clients accessing the same central server, which avoids bottlenecks near that server.
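To make "a copy of the data near to the client" concrete, here is a minimal sketch that picks the geographically closest edge location using the haversine great-circle distance. The edge names and coordinates are made-up examples, and choosing by geographic distance alone is an assumption; real CDNs typically route requests with DNS or anycast and also consider load and network conditions.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical edge locations as (latitude, longitude) in degrees.
EDGE_LOCATIONS = {
    "frankfurt": (50.11, 8.68),
    "singapore": (1.35, 103.82),
    "virginia": (39.04, -77.49),
}

EARTH_RADIUS_KM = 6371


def distance_km(a, b):
    """Great-circle distance between two (lat, lon) points (haversine formula)."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def nearest_edge(client):
    """Return the name of the edge location closest to the client."""
    return min(EDGE_LOCATIONS,
               key=lambda name: distance_km(client, EDGE_LOCATIONS[name]))


print(nearest_edge((6.93, 79.86)))   # a client near Colombo
print(nearest_edge((48.86, 2.35)))   # a client near Paris
```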

Discuss the requirements for virtualization

Hardware and software requirements for virtualization vary with the operating system and other software you need to run. You generally must have a fast enough processor, enough RAM and a big enough hard drive to install the system and application software you want to run, just as you would if you were installing it directly on your physical machine.

You’ll generally need slightly more resources to comfortably run software within a virtual machine, because the virtual machine software itself will consume some of your resources. If you’re not sure whether your computer can comfortably run a given workload under a virtualization scenario, you may want to check with the makers of the virtualization software and the operating system and apps you must run.
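The sizing rule described above (guest needs plus some overhead must fit on the host) can be written as a small check. The function name and the default figures for host OS memory and per-VM overhead are placeholder assumptions, not vendor numbers; substitute the values published for your virtualization software.

```python
def host_can_run(host_ram_gb, guest_ram_gb, host_os_ram_gb=4, overhead_per_vm_gb=0.5):
    """Return True if all guests, the host OS and per-VM overhead fit in host RAM.

    host_os_ram_gb and overhead_per_vm_gb are illustrative defaults only.
    """
    needed = (host_os_ram_gb
              + sum(guest_ram_gb)
              + overhead_per_vm_gb * len(guest_ram_gb))
    return needed <= host_ram_gb


# Two 4 GB guests on a 16 GB host: 4 + 8 + 1 = 13 GB needed.
print(host_can_run(16, [4, 4]))
# An 8 GB and a 4 GB guest on the same host: 4 + 12 + 1 = 17 GB needed.
print(host_can_run(16, [8, 4]))
```

The same kind of check applies to CPU cores and disk space.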

Discuss and compare the pros and cons of different virtualization techniques in different levels

The advantages of switching to a virtual environment are plentiful, saving you money and time while providing much greater business continuity and ability to recover from disaster.

- Reduced spending: For companies with fewer than 1,000 employees, up to 40 percent of an IT budget is spent on hardware. Purchasing multiple servers is often a good chunk of this cost. Virtualizing requires fewer servers and extends the lifespan of existing hardware. This also means reduced energy costs.

- Easier backup and disaster recovery: Disasters are swift and unexpected. In seconds, leaks, floods, power outages, cyber-attacks, theft and even snow storms can wipe out data essential to your business. Virtualization makes recovery much swifter and more accurate, with less manpower and a fraction of the equipment, because it is all virtual.

- Better business continuity: With an increasingly mobile workforce, having good business continuity is essential. Without it, files become inaccessible, work goes undone, processes are slowed and employees are less productive. Virtualization gives employees access to software, files and communications anywhere they are and can enable multiple people to access the same information for more continuity.

Disadvantages of virtualization

The disadvantages of virtualization are mostly those that would come with any technology transition. With careful planning and expert implementation, all of these drawbacks can be overcome.

- Upfront costs: An investment in virtualization software, and possibly in additional hardware, might be required to make virtualization possible. This depends on your existing network. Many businesses have sufficient capacity to accommodate virtualization without spending a lot of cash. This obstacle can also be more readily navigated by working with a Managed IT Services provider, who can offset the cost with monthly leasing or purchase plans.

- Software licensing considerations: This is becoming less of a problem as more software vendors adapt to the increased adoption of virtualization, but it is important to check with your vendors to clearly understand how they view software use in a virtualized environment to a

- Possible learning curve: Implementing and managing a virtualized environment will require IT staff with expertise in virtualization. On the user side a typical virtual environment will operate similarly to the non-virtual environment. There are some applications that do not adapt well to the virtualized environment – this is something that your IT staff will need to be aware of and address prior to converting.

Popular implementations and available tools for each level of visualization

Here’s my run-down of some of the best, most popular or most innovative data visualization tools available today. These are all paid-for (although they all offer free trials or personal-use licences). Look out for another post soon on completely free and open source alternatives.

Tableau

Tableau is often regarded as the grand master of data visualization software and for good reason. Tableau has a very large customer base of 57,000+ accounts across many industries due to its simplicity of use and ability to produce interactive visualizations far beyond those provided by general BI solutions. It is particularly well suited to handling the huge and very fast-changing datasets which are used in Big Data operations, including artificial intelligence and machine learning applications, thanks to integration with a large number of advanced database solutions including Hadoop, Amazon AWS, MySQL, SAP and Teradata. Extensive research and testing have gone into enabling Tableau to create graphics and visualizations as efficiently as possible, and to make them easy for humans to understand.

Qlikview

Qlik with their Qlikview tool is the other major player in this space and Tableau’s biggest competitor. The vendor has over 40,000 customer accounts across over 100 countries, and those that use it frequently cite its highly customizable setup and wide feature range as a key advantage. This however can mean that it takes more time to get to grips with and use it to its full potential. In addition to its data visualization capabilities Qlikview offers powerful business intelligence, analytics and enterprise reporting capabilities and I particularly like the clean and clutter-free user interface. Qlikview is commonly used alongside its sister package, Qliksense, which handles data exploration and discovery. There is also a strong community and there are plenty of third-party resources available online to help new users understand how to integrate it in their projects.

FusionCharts

This is a very widely-used, JavaScript-based charting and visualization package that has established itself as one of the leaders in the paid-for market. It can produce 90 different chart types and integrates with a large number of platforms and frameworks giving a great deal of flexibility. One feature that has helped make FusionCharts very popular is that rather than having to start each new visualization from scratch, users can pick from a range of “live” example templates, simply plugging in their own data sources as needed.

Highcharts

Like FusionCharts, this also requires a licence for commercial use, although it can be used freely as a trial or for non-commercial or personal use. Its website claims that it is used by 72 of the world’s 100 largest companies, and it is often chosen when a fast and flexible solution must be rolled out with minimal specialist data visualization training before it can be put to work. A key to its success has been its focus on cross-browser support, meaning anyone can view and run its interactive visualizations, which is not always true with newer platforms.

What is a hypervisor and what is its role?

A hypervisor or virtual machine monitor is computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine.

Understanding the Role of a Hypervisor

The explanation of a hypervisor up to this point has been fairly simple: it is a layer of software that sits between the hardware and the one or more virtual machines that it supports. Its job is also fairly simple. The three characteristics defined by Popek and Goldberg illustrate these tasks:

- Provide an environment identical to the physical environment

- Provide that environment with minimal performance cost

- Retain complete control of the system resources
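The third characteristic, retaining control of system resources, can be illustrated with a toy model in which guests receive memory only through the hypervisor. `ToyHypervisor` and its methods are hypothetical; the sketch refuses any request that exceeds free host memory, whereas real hypervisors also manage CPU, devices and often allow controlled overcommitment.

```python
class ToyHypervisor:
    """Hypothetical model: hands out host memory to guests and never overcommits."""

    def __init__(self, host_memory_mb):
        self.host_memory_mb = host_memory_mb
        self.guests = {}  # guest name -> memory assigned to it, in MB

    def free_memory_mb(self):
        return self.host_memory_mb - sum(self.guests.values())

    def create_guest(self, name, memory_mb):
        # The hypervisor, not the guest, decides whether the request is granted.
        if memory_mb > self.free_memory_mb():
            raise MemoryError(f"not enough free host memory for {name}")
        self.guests[name] = memory_mb


hypervisor = ToyHypervisor(host_memory_mb=8192)
hypervisor.create_guest("web", 4096)
hypervisor.create_guest("db", 2048)
try:
    hypervisor.create_guest("batch", 4096)
    rejected = False
except MemoryError:
    rejected = True
print(hypervisor.free_memory_mb(), rejected)
```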

How is emulation different from VMs?

Virtual machines make use of CPU self-virtualization, to whatever extent it exists, to provide a virtualized interface to the real hardware. Emulators emulate hardware without relying on the CPU being able to run code directly and redirect some operations to a hypervisor controlling the virtual container.
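A minimal sketch of what "emulating hardware" means: the loop below interprets every instruction of a made-up two-register CPU in software instead of letting the host CPU run guest code directly. The instruction set (`LOAD`, `ADD`, `HALT`) and the `emulate` function are invented for illustration and do not correspond to any real processor or emulator.

```python
def emulate(program):
    """Interpret a program for a made-up two-register CPU, one instruction at a time."""
    registers = {"A": 0, "B": 0}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        if op == "LOAD":      # LOAD reg, constant
            registers[args[0]] = args[1]
        elif op == "ADD":     # ADD dst, src  (dst = dst + src)
            registers[args[0]] += registers[args[1]]
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1
    return registers


result = emulate([
    ("LOAD", "A", 2),
    ("LOAD", "B", 40),
    ("ADD", "A", "B"),
    ("HALT",),
])
print(result)
```

Because every guest instruction costs several host operations, this approach is flexible (the guest CPU need not match the host) but slower than the direct execution a virtual machine relies on.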

Compare and contrast the VMs and containers/dockers, indicating their advantages and disadvantages

Both VMs and containers can help get the most out of available computer hardware and software resources. Containers are the new kids on the block, but VMs have been, and continue to be, tremendously popular in data centers of all sizes.

If you’re looking for the best solution for running your own services in the cloud, you need to understand these virtualization technologies, how they compare to each other, and what are the best uses for each. Here’s our quick introduction.

Benefits of VMs

- All OS resources available to apps

- Established management tools

- Established security tools

- Better known security controls